Effective Branching Strategies in Development Teams

Introduction

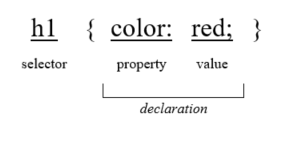

In a development environment, choosing a suitable branching strategy is crucial. It lets teams determine which code is running in production, reproduce bugs that occur in production, and manage commits intended for upcoming features or for immediate production deployments. The choice is a collective decision, tailored to the team's workflow and demands. Before diving into strategies, it helps to understand what a branch is in Git: a lightweight, movable pointer to a commit.
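To make the idea of a branch as a pointer concrete, here is a minimal Python sketch of a toy repository model. It is not how Git is implemented: the `Commit` and `Repo` classes and the simplified commit-id scheme are assumptions for illustration. In this model a branch is just a name mapped to a commit id, creating a branch copies that id, and committing moves the pointer forward.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Commit:
    """A commit records a message and the ids of its parent commits."""
    id: str
    message: str
    parents: tuple[str, ...]


@dataclass
class Repo:
    """Toy repository: commits by id, plus branches as name -> commit id pointers."""
    commits: dict[str, Commit] = field(default_factory=dict)
    branches: dict[str, str] = field(default_factory=dict)

    def commit(self, branch: str, message: str) -> str:
        """Add a commit on top of `branch` and move the branch pointer to it."""
        parent = self.branches.get(branch)
        parents = (parent,) if parent else ()
        # Simplified id: Git hashes the whole commit object, not just these two fields.
        cid = hashlib.sha1(f"{parents}\n{message}".encode()).hexdigest()
        self.commits[cid] = Commit(cid, message, parents)
        self.branches[branch] = cid
        return cid

    def create_branch(self, name: str, start: str) -> None:
        """Creating a branch copies a pointer; no commits are duplicated."""
        self.branches[name] = self.branches[start]


repo = Repo()
repo.commit("main", "initial commit")
repo.create_branch("feature/login", "main")  # both names now point at the same commit
repo.commit("feature/login", "add login form")
# Only the feature pointer moved; main still points at the initial commit.
```

Because a branch is only a pointer, creating one is cheap regardless of how much history sits behind it.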

Branching Strategies


Branch Types

- Main Branch: All commits here are either in production or about to be deployed soon.

- Feature Branches: Created from the tip of main for new features and committed to until the feature is complete.

- Hotfix Branches: Created from main to fix production issues that must be deployed immediately.
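The branch types above can be captured as a small policy table. This Python sketch assumes a hypothetical naming convention in which short-lived branches are named `<type>/<description>` (for example `feature/search-filters`); the `BranchPolicy` class and `policy_for` helper are illustrative names, not part of any tool. Main has no entry because it is the long-lived base that both other types start from.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BranchPolicy:
    """Where a branch type starts from and where its finished work is merged."""
    kind: str
    branches_from: str
    merges_into: str


# Hypothetical convention: short-lived branches are named "<type>/<description>".
POLICIES = {
    "feature": BranchPolicy("feature", branches_from="main", merges_into="main"),
    "hotfix": BranchPolicy("hotfix", branches_from="main", merges_into="main"),
}


def policy_for(branch: str) -> BranchPolicy:
    """Look up the policy for a feature or hotfix branch name."""
    kind, sep, description = branch.partition("/")
    if not sep or not description or kind not in POLICIES:
        raise ValueError(f"unrecognised branch name: {branch!r}")
    return POLICIES[kind]


print(policy_for("feature/search-filters").branches_from)  # main
print(policy_for("hotfix/session-timeout").merges_into)  # main
```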

Commit Management

When a feature takes several weeks to build, its commits must not be included in any production deployments that happen in the meantime, and the approach has to scale to multiple engineers working on multiple features at once.
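One way to see why unfinished feature work stays out of a deployment: if production is built from main, only commits reachable from main's tip ship. The Python sketch below assumes a hypothetical history stored as a dict from commit id to parent ids; in Git itself, `git log main..feature/search` lists the commits reachable from the feature branch but not from main.

```python
from __future__ import annotations


def reachable(parents: dict[str, tuple[str, ...]], tip: str) -> set[str]:
    """Return every commit reachable from `tip` by following parent links."""
    seen: set[str] = set()
    stack = [tip]
    while stack:
        cid = stack.pop()
        if cid not in seen:
            seen.add(cid)
            stack.extend(parents[cid])
    return seen


# Hypothetical history: feature/search branched from c2, then main gained c3.
parents = {
    "c1": (),
    "c2": ("c1",),
    "c3": ("c2",),
    "f1": ("c2",),
    "f2": ("f1",),
}
branches = {"main": "c3", "feature/search": "f2"}

shipping = reachable(parents, branches["main"])  # {"c1", "c2", "c3"}
unshipped = reachable(parents, branches["feature/search"]) - shipping  # {"f1", "f2"}
```

Any number of engineers can keep committing to their own feature branches this way; nothing they do changes what is reachable from main until a merge happens.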

Approach to Production Deployment

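Once a feature is complete, its commits are merged into main through a pull request in CodeCommit, where the team reviews and comments on the changes before they are ready for deployment. Commits can be merged using a Fast forward merge, Squash and merge, or a 3-way merge, whichever suits the situation best. A fast-forward merge simply moves main to the feature branch's latest commit and is only possible when main has not gained new commits since the branch was created; a squash merge condenses the feature's commits into one new commit on main; a 3-way merge creates a merge commit whose parents are the tips of both branches, preserving both histories.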
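The three merge options differ mainly in what happens to the commit graph. This Python sketch models only that graph (no file contents); the `merge` helper, the strategy strings, and the generated ids such as `merge(feature/search)` are hypothetical. A fast-forward only moves a pointer, a squash adds one commit with a single parent, and a 3-way merge adds one commit with two parents.

```python
from __future__ import annotations


def ancestors(parents: dict[str, tuple[str, ...]], tip: str) -> set[str]:
    """All commits reachable from `tip`, including `tip` itself."""
    seen: set[str] = set()
    stack = [tip]
    while stack:
        cid = stack.pop()
        if cid not in seen:
            seen.add(cid)
            stack.extend(parents[cid])
    return seen


def merge(
    parents: dict[str, tuple[str, ...]],
    branches: dict[str, str],
    target: str,
    source: str,
    strategy: str,
) -> str:
    """Merge `source` into `target` using `strategy`; return target's new tip."""
    target_tip, source_tip = branches[target], branches[source]
    if strategy == "fast-forward":
        # Only possible when target has not moved since source branched from it.
        if target_tip not in ancestors(parents, source_tip):
            raise ValueError("cannot fast-forward: target has commits the source lacks")
        new_tip = source_tip  # no new commit; the pointer just moves
    elif strategy == "squash":
        new_tip = f"squash({source})"  # hypothetical id for the single combined commit
        parents[new_tip] = (target_tip,)  # one parent: source's commits are not linked in
    elif strategy == "three-way":
        new_tip = f"merge({source})"  # hypothetical id for the merge commit
        parents[new_tip] = (target_tip, source_tip)  # two parents: both histories kept
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    branches[target] = new_tip
    return new_tip


# Main has not moved since feature/search branched from c2, so a fast-forward works.
parents = {"c1": (), "c2": ("c1",), "f1": ("c2",), "f2": ("f1",)}
branches = {"main": "c2", "feature/search": "f2"}
merge(parents, branches, "main", "feature/search", "fast-forward")  # main now points at "f2"
```

If main had gained a commit in the meantime, the fast-forward call would raise `ValueError`, and a squash or 3-way merge would be needed instead.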

CI/CD Integration

A Continuous Integration and Continuous Deployment (CI/CD) pipeline tests code quality, deploys to staging environments, and, once changes are merged, deploys to production. It serves both feature deployments and hotfixes.
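The gating logic of such a pipeline can be sketched in a few lines of Python. The stage names, the `run_pipeline` function, and the rule that every passing build goes to staging are assumptions for illustration; real pipelines are usually defined in the CI/CD service's own configuration. The point shown is the ordering: tests first, staging next, and production only for code that has been merged into main.

```python
from __future__ import annotations

from typing import Callable


def run_pipeline(
    branch: str,
    run_tests: Callable[[], bool],
    deploy: Callable[[str], None],
) -> list[str]:
    """Run hypothetical CI/CD stages for a push to `branch`; return the stages completed."""
    completed: list[str] = []
    # Every push, on any branch, runs the test suite first.
    if not run_tests():
        return completed  # a failing build stops the pipeline before any deployment
    completed.append("test")
    # Assumption: every passing build is deployed to a staging environment.
    deploy("staging")
    completed.append("staging")
    # Only code that has been merged into main is promoted to production.
    if branch == "main":
        deploy("production")
        completed.append("production")
    return completed


environments: list[str] = []
run_pipeline("feature/search", lambda: True, environments.append)  # ["test", "staging"]
run_pipeline("main", lambda: True, environments.append)  # ["test", "staging", "production"]
run_pipeline("hotfix/session-timeout", lambda: False, environments.append)  # []
```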

Handling Merge Conflicts

When multiple people merge into main, conflicts can occur where their changes overlap, for example when two branches edit the same lines. Each conflict is resolved by choosing between, or combining, the overlapping changes. While a pull request is open, more commits can still be pushed to its source branch, and the pull request updates to include them.
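Why conflicts arise can be shown with a simplified three-way merge over lines. This Python sketch is not Git's algorithm (Git diffs whole hunks and handles inserted and deleted lines); it assumes all three versions have the same number of lines, and `merge_lines` is a hypothetical helper. A line merges cleanly when only one side changed it relative to the common base, and conflicts when both sides changed it differently.

```python
from __future__ import annotations


def merge_lines(
    base: list[str],
    ours: list[str],
    theirs: list[str],
    ours_label: str = "ours",
    theirs_label: str = "theirs",
) -> tuple[list[str], int]:
    """Simplified line-by-line three-way merge; returns merged lines and conflict count."""
    # Assumption: all three versions have the same number of lines (edits only).
    if not (len(base) == len(ours) == len(theirs)):
        raise ValueError("this sketch only handles in-place line edits")
    merged: list[str] = []
    conflicts = 0
    for b, o, t in zip(base, ours, theirs):
        if o == t or t == b:
            merged.append(o)  # both sides agree, or their side left the line unchanged
        elif o == b:
            merged.append(t)  # only their side changed the line
        else:
            # Both sides changed the same line differently: emit Git-style conflict markers.
            conflicts += 1
            merged += [f"<<<<<<< {ours_label}", o, "=======", t, f">>>>>>> {theirs_label}"]
    return merged, conflicts


base = ["timeout = 30", "retries = 3"]
main = ["timeout = 60", "retries = 3"]
feature = ["timeout = 45", "retries = 5"]
merged, conflicts = merge_lines(base, main, feature, "main", "feature/retry-policy")
# conflicts == 1: both branches changed the timeout line; the retries change merges cleanly.
```

Resolving the conflict means replacing the marker block with the value the team agrees on, then committing the result.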

Hotfix Requirements

For issues found in production, such as security vulnerabilities, a hotfix branch is created from main. Once the fix is made and thoroughly tested, the branch is merged back into main, and CI/CD deploys the hotfix.
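The hotfix flow can be traced on the same kind of toy commit graph. In this Python sketch the ids, branch names, and the `apply_hotfix` helper are hypothetical, and review and testing are reduced to a comment. It shows that branching the hotfix from main, rather than from a feature branch, means the deployment contains the fix but none of the unfinished feature work.

```python
from __future__ import annotations


def ancestors(parents: dict[str, tuple[str, ...]], tip: str) -> set[str]:
    """All commits reachable from `tip`, including `tip` itself."""
    seen: set[str] = set()
    stack = [tip]
    while stack:
        cid = stack.pop()
        if cid not in seen:
            seen.add(cid)
            stack.extend(parents[cid])
    return seen


def apply_hotfix(
    parents: dict[str, tuple[str, ...]],
    branches: dict[str, str],
    name: str,
    fix_id: str,
) -> set[str]:
    """Branch `name` from main, commit `fix_id`, merge back; return the commits main now deploys."""
    # 1. Start from main, i.e. the code currently in (or about to go to) production.
    branches[name] = branches["main"]
    # 2. Commit the fix on the hotfix branch; review and testing happen at this point.
    parents[fix_id] = (branches[name],)
    branches[name] = fix_id
    # 3. Merge back into main. In this sketch main has not moved, so it is a fast-forward.
    branches["main"] = fix_id
    # The hotfix branch is no longer needed once its commit is on main.
    del branches[name]
    return ancestors(parents, branches["main"])


parents = {"c1": (), "c2": ("c1",), "f1": ("c2",)}
branches = {"main": "c2", "feature/search": "f1"}
deployed = apply_hotfix(parents, branches, "hotfix/session-timeout", "h1")
# deployed == {"c1", "c2", "h1"}: the fix ships, the unfinished feature commit f1 does not.
```

Note that the feature branch does not contain the fix yet; it picks it up the next time main is merged or rebased into it.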

Dealing with Diverged Branches

When a feature branch has diverged far from main because of prolonged work on the feature, main's commits need to be brought into the feature branch by merging or rebasing. Either approach may require resolving merge conflicts. Rebasing needs extra care because it rewrites the branch's history, which affects anyone else working on the same feature branch.
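The difference between merging and rebasing comes down to commit identity. In Git a commit's id depends on its parent, so replaying the same changes on a new base produces new ids. This Python sketch uses a hypothetical id scheme (a hash of parent id and message only; Git hashes more fields), and `build`, `rebase`, and `merge_main_into` are illustrative helpers. A rebase replaces every feature commit, so teammates' copies no longer match and pushing the rewritten branch needs a force push (for example `git push --force-with-lease`); a merge only adds one commit and leaves existing ids untouched.

```python
from __future__ import annotations

import hashlib


def commit_id(message: str, parent: str) -> str:
    """Hypothetical id scheme: hash the parent id and message (Git hashes more fields)."""
    return hashlib.sha1(f"{parent}\n{message}".encode()).hexdigest()


def build(messages: list[str], base: str) -> list[str]:
    """Commit `messages` one after another on top of `base`; return ids oldest first."""
    ids: list[str] = []
    parent = base
    for message in messages:
        parent = commit_id(message, parent)
        ids.append(parent)
    return ids


def rebase(messages: list[str], new_base: str) -> list[str]:
    """Replay the same changes on top of `new_base`; every replayed commit gets a new id."""
    return build(messages, new_base)


def merge_main_into(feature_tip: str, main_tip: str) -> tuple[str, tuple[str, str]]:
    """Merging adds one commit with two parents and leaves existing ids untouched."""
    parents = (feature_tip, main_tip)
    return commit_id("Merge branch 'main'", ",".join(parents)), parents


feature_messages = ["add search box", "call search API"]
original = build(feature_messages, "c2")  # feature/search branched from c2
rebased = rebase(feature_messages, "c3")  # main has since moved to c3
merge_id, merge_parents = merge_main_into(original[-1], "c3")
```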

Conclusions

Branching is integral to effective development cycles, and the choice of strategy depends heavily on the team's preferences, working style, and project requirements. Git offers powerful tools and options, and working with a branching strategy becomes more intuitive over time.

Team Decisions and Collaborations

At every step, whether deciding to allow force pushes or resolving conflicts, the team's collective decisions are crucial. Regular collaboration and communication during pull requests and merges keep the workflow smooth and efficient, improving overall productivity.

Final Thoughts

The ideal branching strategy and its related decisions are not one-size-fits-all; they depend on the team's specific needs, workflow, and the nature of its projects. Choosing and implementing the most effective strategy requires a clear understanding of Git's functionality and regular interaction within the team, which together make for seamless and productive development cycles.

The process described here supports feature work and updates, and it also helps resolve unforeseen production issues promptly while maintaining the security and integrity of applications. Keep exploring and adapting to find what works best for your team.